How to create a CVAT benchmark

This guide explains how to create a CVAT benchmark within the platform for Auto QA evaluation.

Steps to Create a CVAT Benchmark

1. Prerequisites

- You must have a project that uses CVAT.

- You need a dataset to evaluate the project.

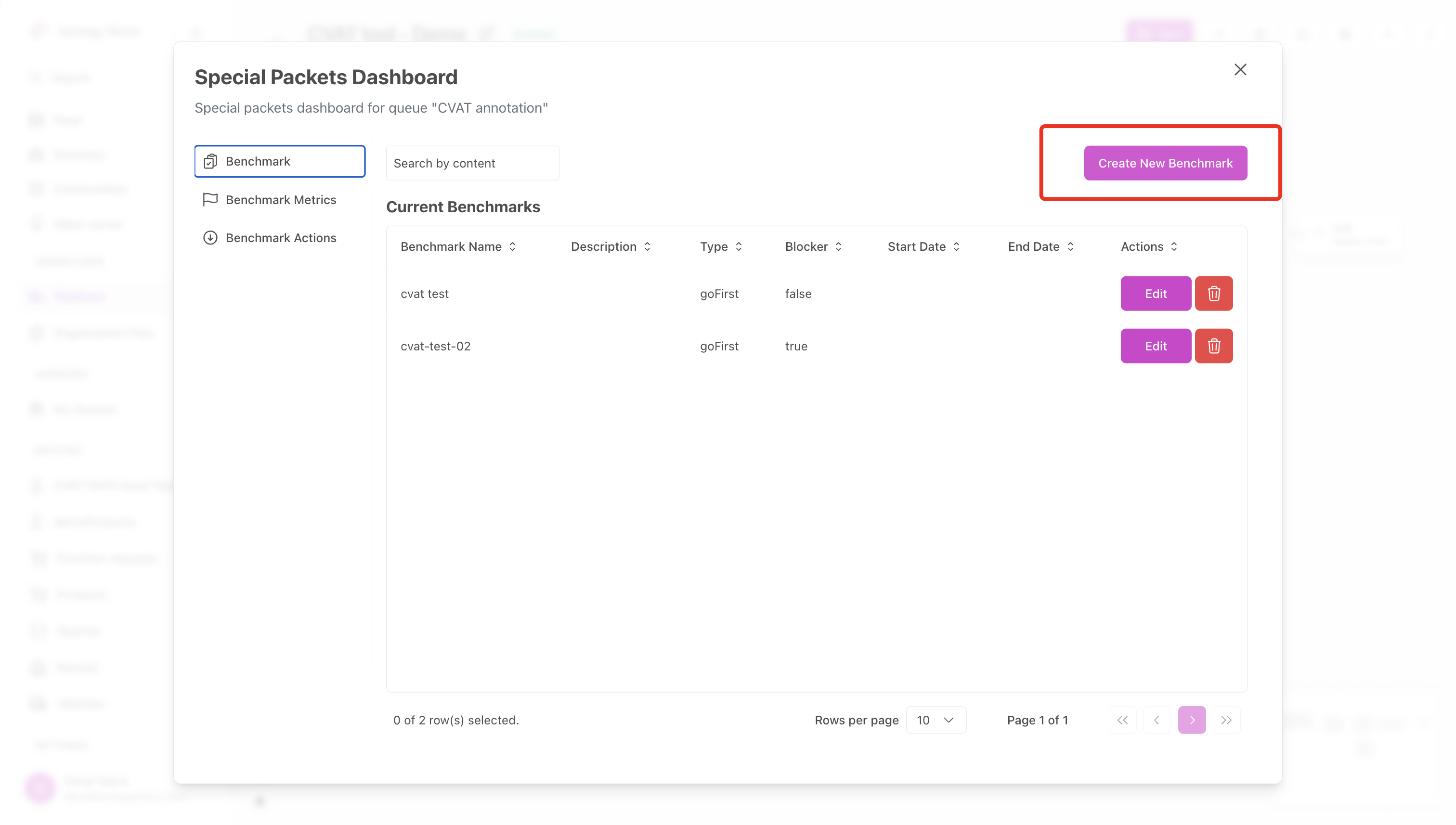

2. Open the Benchmark Management Modal

- Navigate to the section where benchmarks are managed.

- Click the "Create New Benchmark" button.

3. Fill in the Benchmark Details

- Provide the following details:

  - Name: Enter the benchmark's name.

  - Description: Add a short description of the benchmark.

  - Type of Benchmark: Specify the benchmark type.

  - Blocking Benchmark: Indicate whether this benchmark blocks taskers.

  - Start Date/End Date: Include these dates if applicable.

  - Enabled: Specify whether the benchmark is enabled or not.
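If you draft benchmark definitions outside the platform before entering them in the modal, a small record type can catch simple mistakes, such as an end date that falls before the start date. This is a hypothetical Python sketch. The class name BenchmarkDetails, its field names, and the example benchmark_type value are illustrative assumptions that mirror the form fields above. They are not the platform's API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class BenchmarkDetails:
    """Hypothetical mirror of the 'Create New Benchmark' form fields."""
    name: str
    description: str
    benchmark_type: str                 # assumption: free-text type label
    blocking: bool                      # whether the benchmark blocks taskers
    enabled: bool
    start_date: Optional[date] = None   # optional, as in the form
    end_date: Optional[date] = None

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the details look consistent."""
        problems = []
        if not self.name.strip():
            problems.append("name is required")
        if self.start_date is not None and self.end_date is not None and self.end_date < self.start_date:
            problems.append("end date is before start date")
        return problems


details = BenchmarkDetails(
    name="Roof polygons QA",
    description="Checks building, roof, and property polygons",
    benchmark_type="CVAT",
    blocking=True,
    enabled=True,
    start_date=date(2024, 1, 1),
    end_date=date(2024, 3, 31),
)
print(details.validate())  # []
```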

4. Configure Input/Metadata/Output Fields

For this type of benchmark, only the Metadata field needs to be completed. Include information about:

- The labels available for the task.

- The image or images to be annotated, given as URLs.

Example of Expected Metadata

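{ "cvatPayload": "{\"payload\":{\"name\":\"CVAT task\",\"labels\":[{\"name\":\"building\",\"color\":\"#0000FF\",\"type\":\"any\",\"attributes\":[]},{\"name\":\"roof\",\"color\":\"#FF0000\",\"type\":\"any\",\"attributes\":[]},{\"name\":\"property\",\"color\":\"#00FF00\",\"type\":\"any\",\"attributes\":[]}],\"images\":[\"https://valid/image/url\"]}}" }

The value of cvatPayload is itself a JSON object serialized as a string, so every quote inside it must be escaped with a backslash and its braces must balance.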

- Base the metadata on task data that was previously created, with high quality, in the same or a similar pipeline.

- The labels and annotations should resemble a real CVAT task.
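Because cvatPayload holds a JSON document serialized as a string, writing it by hand is error-prone. One way to avoid escaping mistakes is to build the payload as an ordinary object and serialize it twice, as in this Python sketch. The label names, colors, and image URL are copied from the example metadata. The helper name build_metadata is hypothetical.

```python
import json


def build_metadata(task_name: str, labels: list[dict], image_urls: list[str]) -> str:
    """Return the Metadata field as JSON whose cvatPayload value is itself stringified JSON."""
    payload = {"payload": {"name": task_name, "labels": labels, "images": image_urls}}
    # Inner serialization: the CVAT payload becomes a plain string.
    inner = json.dumps(payload, separators=(",", ":"))
    # Outer serialization: json.dumps escapes the inner quotes automatically.
    return json.dumps({"cvatPayload": inner})


labels = [
    {"name": "building", "color": "#0000FF", "type": "any", "attributes": []},
    {"name": "roof", "color": "#FF0000", "type": "any", "attributes": []},
    {"name": "property", "color": "#00FF00", "type": "any", "attributes": []},
]
metadata = build_metadata("CVAT task", labels, ["https://valid/image/url"])
print(metadata)
```

To check a hand-written Metadata field, you can reverse the process. Call json.loads on the whole field, then call json.loads again on the cvatPayload value. Both calls should succeed and return the payload.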

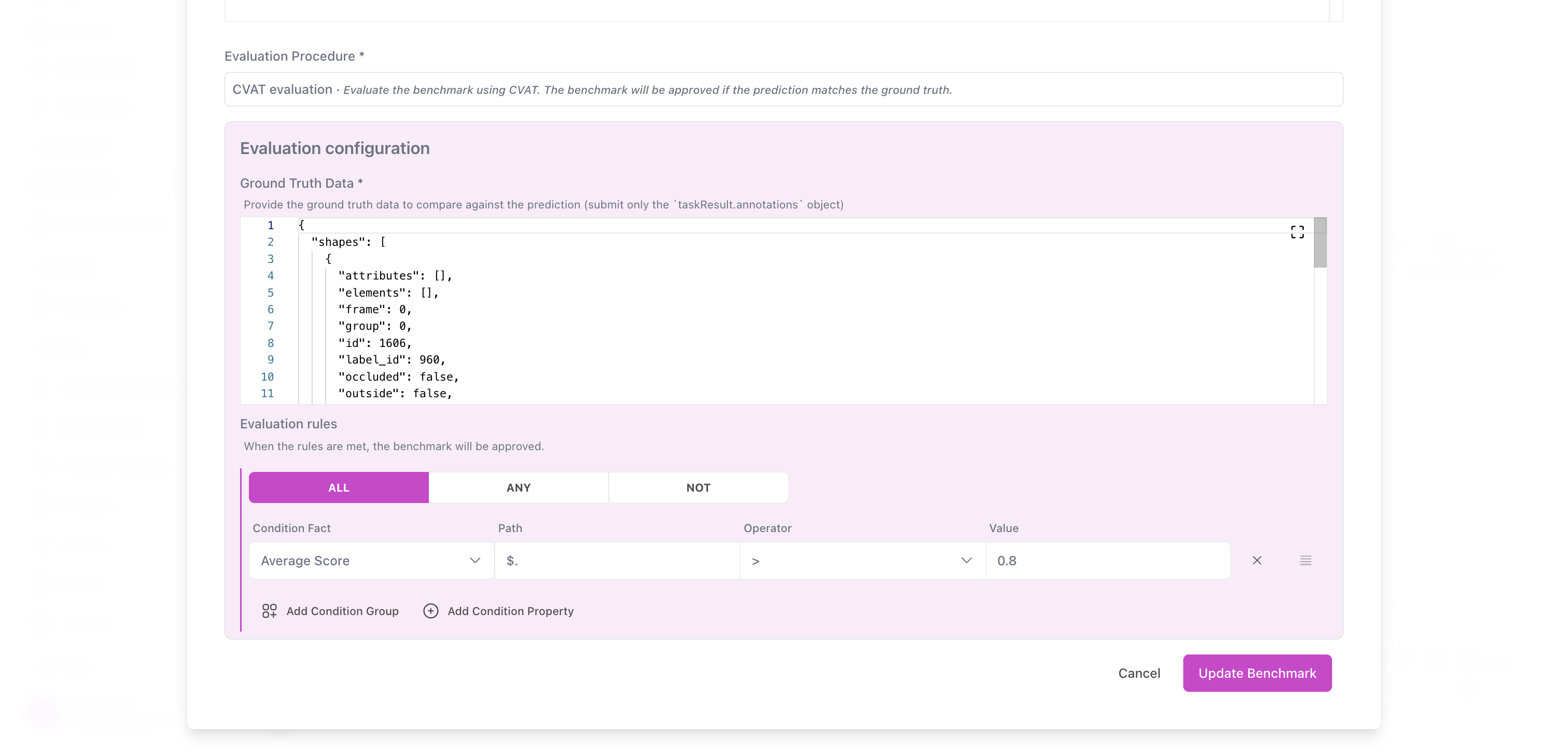

5. Select the Evaluation Procedure

- Choose "CVAT Evaluation" from the available options.

- This will enable the Ground Truth Data field.

6. Fill in Ground Truth Data

- Retrieve the Ground Truth Data from a task of excellent quality.

- Use the contents of output.taskResult.annotations from that task. This object contains all the polygon information created in the CVAT tool.
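If you export the reference task's result as JSON, you can extract the annotations programmatically. This minimal Python sketch assumes the result is a nested object in which the annotations sit under output, then taskResult, then annotations, matching the path named above. The helper extract_ground_truth and the placeholder {"shapes": []} content are hypothetical. The actual annotation contents are passed through untouched.

```python
import json
from typing import Any


def extract_ground_truth(task_result: dict[str, Any]) -> Any:
    """Follow output.taskResult.annotations and return whatever is stored there."""
    node: Any = task_result
    for key in ("output", "taskResult", "annotations"):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(f"missing '{key}' while following output.taskResult.annotations")
        node = node[key]
    return node


# Hypothetical example; in practice, load the reference task's exported JSON instead.
task_result = {"output": {"taskResult": {"annotations": {"shapes": []}}}}
ground_truth = json.dumps(extract_ground_truth(task_result))
print(ground_truth)  # {"shapes": []}
```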

7. Define Evaluation Rules

- Add one or more rules to evaluate the task. These rules help verify whether the polygons created in the task meet expectations.

Rule Configuration

- Condition Fact: Choose what to evaluate, such as:

  - User's output

  - Intersection over union

  - Total matches

  - Score distribution

- Path: Use the placeholder "$." if no specific path is needed.

- Operator: Select an operator such as >, <, =, or !=.

- Value: Enter the expected value for the rule.
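Intersection over union (IoU) compares a submitted polygon with its ground-truth counterpart. It is the area the two polygons share divided by the area they cover together, so 1.0 is a perfect match and 0.0 means no overlap. This guide does not describe how the platform computes IoU. The Python sketch below only illustrates the metric, under stated assumptions. It assumes both polygons are convex, with vertices in counter-clockwise order, and it clips one polygon against the other with the Sutherland-Hodgman algorithm, which is not valid for concave clipping shapes.

```python
# Hypothetical illustration of polygon IoU; convex, counter-clockwise polygons only.
Point = tuple[float, float]


def polygon_area(poly: list[Point]) -> float:
    """Absolute polygon area via the shoelace formula."""
    total = 0.0
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def _inside(p: Point, a: Point, b: Point) -> bool:
    """True if p is on or to the left of the directed edge a -> b (inside a CCW polygon)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0


def _intersect(p1: Point, p2: Point, a: Point, b: Point) -> Point:
    """Point where segment p1-p2 crosses the infinite line through a and b."""
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, a, b
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / denom
    return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))


def clip(subject: list[Point], clipper: list[Point]) -> list[Point]:
    """Sutherland-Hodgman: keep the part of subject that lies inside the convex clipper."""
    output = list(subject)
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        candidates, output = output, []
        if not candidates:
            break
        prev = candidates[-1]
        for cur in candidates:
            if _inside(cur, a, b):
                if not _inside(prev, a, b):
                    output.append(_intersect(prev, cur, a, b))
                output.append(cur)
            elif _inside(prev, a, b):
                output.append(_intersect(prev, cur, a, b))
            prev = cur
    return output


def iou(poly_a: list[Point], poly_b: list[Point]) -> float:
    """Intersection area divided by union area (0.0 when the polygons do not overlap)."""
    inter = clip(poly_a, poly_b)
    inter_area = polygon_area(inter) if len(inter) >= 3 else 0.0
    union = polygon_area(poly_a) + polygon_area(poly_b) - inter_area
    return inter_area / union if union > 0 else 0.0


gt_polygon = [(0, 0), (2, 0), (2, 2), (0, 2)]
submitted = [(1, 0), (3, 0), (3, 2), (1, 2)]
print(round(iou(gt_polygon, submitted), 3))  # 0.333
```

In this example, the two 2x2 squares overlap in a 1x2 strip. The intersection area is 2 and the union area is 4 + 4 - 2 = 6, so the IoU is 1/3.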

Example Rule

{ "conditionFact": "Average score", "path": "$.", "operator": ">", "value": 0.8 }

This rule specifies that the average score for the task must be greater than 0.8 for the task to be considered correct.
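To see how a rule like this behaves, you can evaluate it locally. The Python sketch below is hypothetical and does not reproduce the platform's evaluator. It assumes the computed facts, such as "Average score", are available as a dictionary keyed by conditionFact. It treats the "$." path as "use the fact's value as-is" and supports only the four operators listed in the rule configuration. The names OPERATORS and rule_passes are illustrative.

```python
import operator
from typing import Any

# Assumed mapping from the rule's operator strings to Python comparisons.
OPERATORS = {
    ">": operator.gt,
    "<": operator.lt,
    "=": operator.eq,
    "!=": operator.ne,
}


def rule_passes(rule: dict[str, Any], facts: dict[str, Any]) -> bool:
    """Return True if facts[rule['conditionFact']] satisfies the rule's operator and value."""
    if rule["path"] != "$.":
        # Sub-paths are out of scope for this sketch.
        raise NotImplementedError("only the '$.' placeholder path is handled here")
    compare = OPERATORS[rule["operator"]]
    return compare(facts[rule["conditionFact"]], rule["value"])


rule = {"conditionFact": "Average score", "path": "$.", "operator": ">", "value": 0.8}
print(rule_passes(rule, {"Average score": 0.85}))  # True
print(rule_passes(rule, {"Average score": 0.8}))   # False: 0.8 is not greater than 0.8
```

Because the operator is a strict ">", a task whose average score is exactly 0.8 fails this rule.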

Notes

- It is crucial to use a task with high-quality data as the basis for creating benchmarks.

- Benchmarks provide a structured method to evaluate task performance based on specific criteria.